AI crawlers have changed the web data industry for good, and for the better. These intelligent automation tools extract information and can turn complex, interlinked websites into structured data. The same approach underpins the training of leading models such as Google's Gemini and OpenAI's ChatGPT, whose makers use AI crawler bots to gather training data at scale. Heavy investment in the field is accelerating progress further.

This guide shows how AI crawlers are improving the industry and explains why. I will also walk through real-world scenarios that can help in your daily work, whether you are a data analyst, marketer, researcher, or a business looking to improve through AI crawling.

By the end of the article, you will understand:

- Why and how AI is transforming the crawling industry

- What makes a great AI web crawler

- How the top 8 AI crawlers of 2025 compare, and what each is used for

- Which tool wins this guide

- How to use an AI crawler in the real world

What is an AI Crawler?

AI crawling builds on traditional crawling by adding AI capabilities, which let the underlying algorithms work more intelligently and quickly. Crawling itself is the technique of automatically browsing the web by following links from page to page. Along the way, a crawler indexes the content it finds; that index powers search engines like Google, and the collected content can also be used to train AI models. Now that we're familiar with the basics, let's explore the role of AI in the crawling industry.
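To make the basic loop concrete, here is a minimal breadth-first crawler in Python. It is a sketch under simplifying assumptions, not how any particular product works: pages come from a small in-memory dictionary (`FAKE_WEB`, hypothetical) instead of real HTTP requests, the "index" is just a dict mapping each URL to its HTML, and links are extracted with the standard library's `HTMLParser`.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl: fetch a page, index it, queue its unseen links."""
    index = {}                 # url -> page HTML (a stand-in for a search index)
    queue = deque([start_url])
    seen = {start_url}
    while queue and len(index) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:       # page missing or fetch failed
            continue
        index[url] = html
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)   # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return index


# A tiny hypothetical "web" so the example runs without network access.
FAKE_WEB = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="https://example.com/">home</a>',
    "https://example.com/b": "No links here.",
}

pages = crawl("https://example.com/", FAKE_WEB.get)
print(sorted(pages))
```

Swapping `FAKE_WEB.get` for a real fetch function (plus politeness rules such as robots.txt checks) turns this into a basic working crawler; AI crawlers add smarter decisions on top of this same loop.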

AI Adoption in the Crawler Industry

AI is transforming many industries globally, and crawling is no exception. Several sub-industries are now emerging within the AI crawling space. One example is AI training crawlers such as OpenAI's GPTBot, whose primary purpose is to crawl the internet for data later used to train ChatGPT. GPTBot's crawling activity grew by 305% over the past year. OpenAI runs other AI crawlers as well, and it is far from the only company doing so.

According to Cloudflare, many major companies now deploy their own custom crawlers to scour the web and bring back valuable data. Cloudflare tracks this AI crawler traffic in Cloudflare Radar and helps site owners manage AI crawlers, including deciding which ones to allow or block in their robots.txt files.
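robots.txt is a plain-text file of per-crawler rules that compliant bots check before fetching a page. As an illustration, the sketch below uses Python's built-in `urllib.robotparser` to evaluate a hypothetical robots.txt that blocks OpenAI's `GPTBot` everywhere while only keeping other bots out of `/private/`. The rules, the `SomeOtherBot` name, and the URLs are made-up examples.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block GPTBot entirely, keep everyone else out of /private/.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse from text instead of fetching over HTTP

for agent in ("GPTBot", "SomeOtherBot"):
    for url in ("https://example.com/blog", "https://example.com/private/data"):
        print(agent, url, "allowed" if parser.can_fetch(agent, url) else "blocked")
```

robots.txt is advisory: it only restricts crawlers that choose to honor it.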

If you thought a 305% increase was significant, Perplexity's crawler grew at an estimated record-high rate of 157,490%, and yes, that figure is correct. This race is only getting started, and we are looking at some of the most significant changes to this industry since the beginning of the Internet.

Whether you are a professional or a business owner, these AI crawler tools can help with your data projects, offering control and precision. Let's now look at the features that make an AI crawler stand out.

What Makes a Great AI Crawler?

Choosing the right AI crawler is not easy in such a large market, but after testing the leading tools, I found the key features that make a great AI crawler stand out. Although different tools serve different purposes, each should include these basics to qualify as an AI crawler.

Key features of AI Crawlers to keep in mind:

- Natural language-driven data crawling: Users should be able to get all the data they need with a simple command, without technical knowledge.

- Automatic anti-bot avoidance: AI makes human-like behavior possible, for example by varying the timing of clicks so the crawler is less likely to be flagged as a bot.

- List and detail page data extraction: Crawling tools should extract data from list pages and automatically visit the detail page behind each entry.

- Multipage scrolling and pagination handling: Automatic detection of pagination and navigation to the next page is essential for a great crawler.

- Dynamic content handling: Large websites often load content dynamically and frequently change their HTML layout, sometimes specifically to prevent scraping; AI features let a crawler adapt to these changes.
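Pagination handling can be sketched with a simple rule: keep following the page's "next" link until there isn't one. The example below assumes the site marks that link with `rel="next"`, a common HTML convention; real sites frequently use other markup, which is where an AI-driven crawler's ability to recognize a "Next" button on its own comes in. Pages are served from a hypothetical in-memory dictionary.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkFinder(HTMLParser):
    """Finds the href of the first <a> or <link> tag marked rel="next"."""

    def __init__(self):
        super().__init__()
        self.next_href = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag in ("a", "link") and "next" in rel and self.next_href is None:
            self.next_href = attrs.get("href")


def follow_pagination(start_url, fetch, max_pages=100):
    """Yield (url, html) for each page, following rel="next" links."""
    url, visited = start_url, set()
    while url and url not in visited and len(visited) < max_pages:
        visited.add(url)           # also guards against pagination loops
        html = fetch(url)
        if html is None:
            break
        yield url, html
        finder = NextLinkFinder()
        finder.feed(html)
        url = urljoin(url, finder.next_href) if finder.next_href else None


# Hypothetical three-page product listing.
PAGES = {
    "https://shop.example/list?page=1": '<li>Item 1</li><a rel="next" href="?page=2">Next</a>',
    "https://shop.example/list?page=2": '<li>Item 2</li><a rel="next" href="?page=3">Next</a>',
    "https://shop.example/list?page=3": "<li>Item 3</li>",
}

for page_url, _ in follow_pagination("https://shop.example/list?page=1", PAGES.get):
    print(page_url)
```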

Top 8 AI Web Crawlers Comparison for 2025

Based on the features above, I would like to present the top 8 AI crawlers on the market, comparing their key features, pros, cons, pricing, and ease of use.

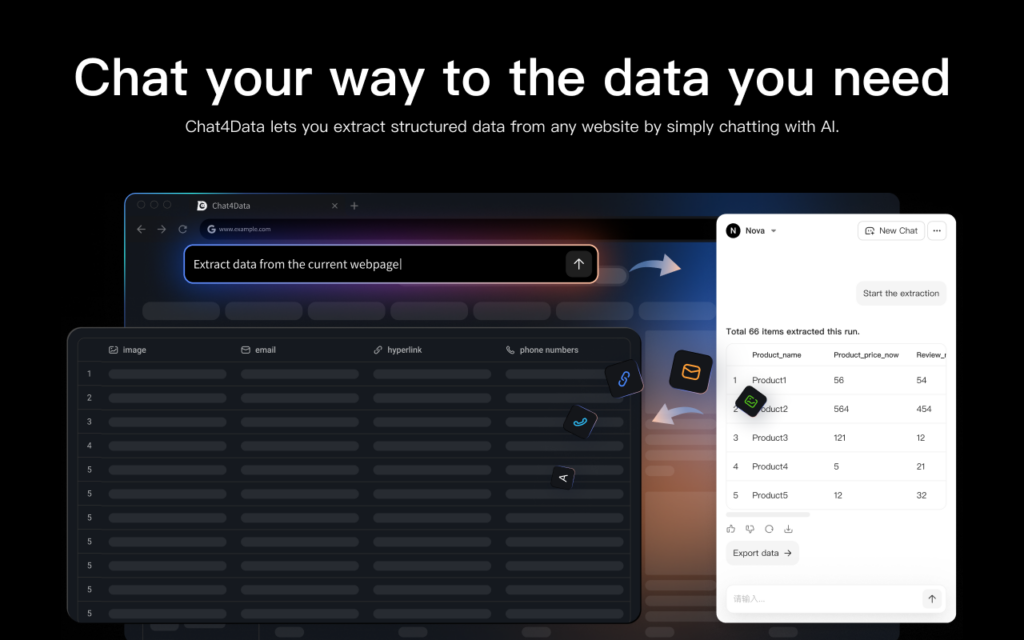

Chat4Data

Chat4Data offers most of the features we look for in a data scraping extension, and more. A key differentiator is its use of natural language prompting and AI enhancements for data extraction.

Key Features:

- Natural language prompting: explain what data you need in plain language, and Chat4Data does the rest for you.

Pros:

- Ability to scrape list and detail page data, which makes Chat4Data act like an AI crawler and discover additional information.

- Privacy-focused: the tool processes everything locally and can scrape data from websites that require a login.

- Efficient credit usage lets a single plan cover a wide variety of scraping tasks.

Cons: The user must stay in the browser window where Chat4Data is actively scraping.

Pricing: Freemium. Free starting credits are included, and premium packages cost $7 (2,000 credits) or $24 (8,000 credits) per month.

Ease of use: High – Chat4Data assists my data extraction even when my needs are unclear, offering auto-suggested prompts to guide my scraping strategy. This functionality ensures I can quickly and accurately fetch the exact data I require.

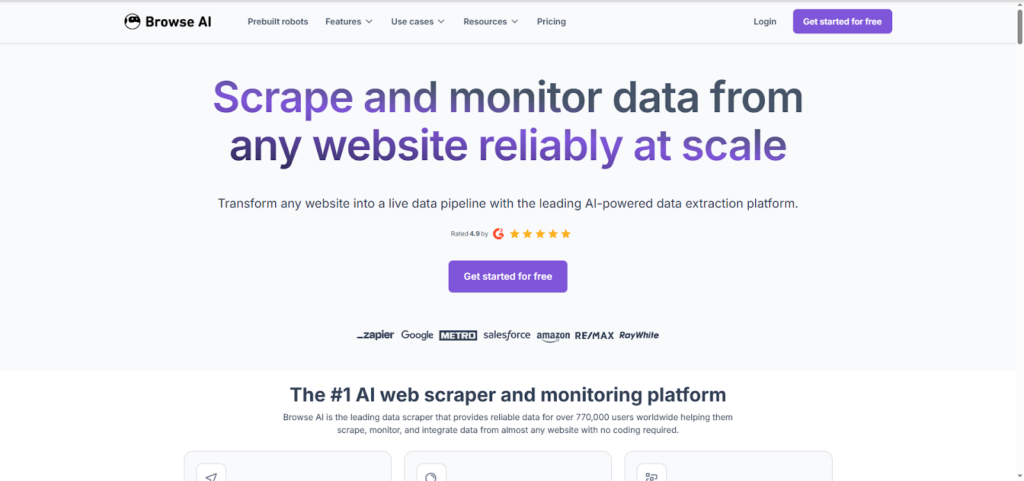

BrowseAI

This platform lets you train extraction robots that continuously monitor websites and pull data, which is essential for tracking changes and maintaining constant oversight.

Pros: Simple user interface; monitoring of websites.

Cons: The high level of customization is only available in the premium package, which can become expensive to use.

Pricing: Free for small projects, then $19 to $69 depending on the size of the project, plus a $500 premium plan for high customization and priority support.

Ease of use: High – can be used without technical knowledge and is easy to set up.

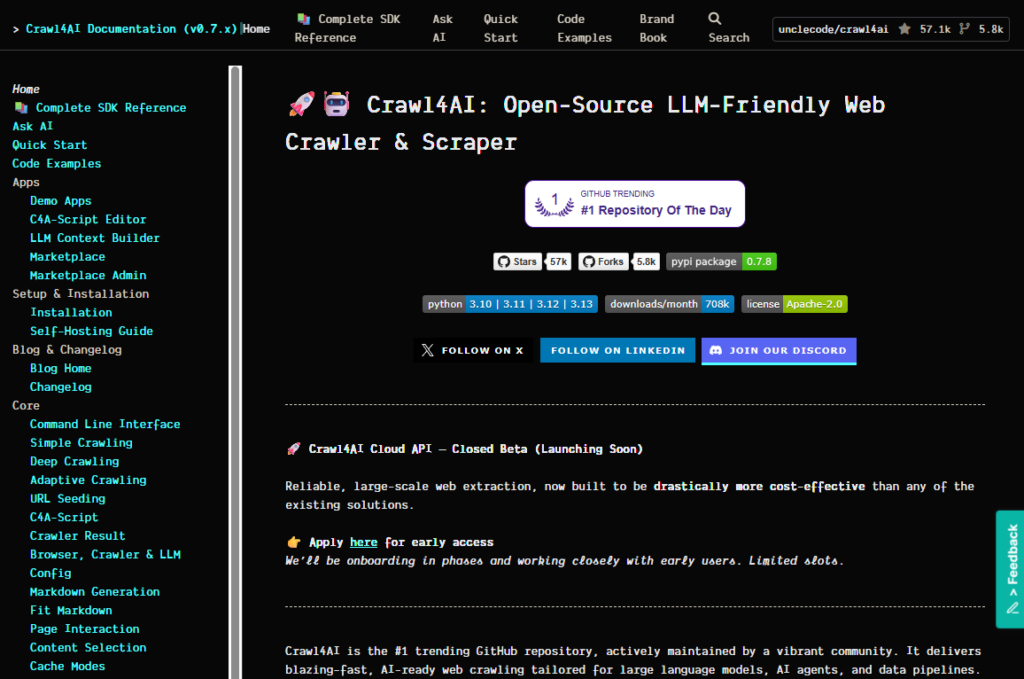

Crawl4AI

Crawl4AI is one of the hottest open-source tools on the market, although it is still in beta. It lets users run local models for crawling and extraction, and it offers several crawling styles, ranging from simple to deep and adaptive.

Key Features:

- Crawl Dispatcher is a module that runs crawl tasks concurrently, up to thousands at the same time.

- Adaptive crawling adds intelligence to traditional crawling: the crawler understands context and gathers just the right amount of content, rather than too much or too little.

- Open-source and free.
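Crawl4AI's dispatcher has its own API, which this guide does not reproduce. The general technique behind running thousands of crawl tasks at once can be sketched with Python's `asyncio`: launch every task, but use a semaphore to cap how many fetches are in flight. Here `fetch_page` is a hypothetical placeholder that simulates network latency instead of making real requests.

```python
import asyncio


async def fetch_page(url):
    """Hypothetical stand-in for a real network fetch."""
    await asyncio.sleep(0.01)  # simulate I/O latency
    return f"<html>content of {url}</html>"


async def crawl_many(urls, max_concurrency=10):
    """Run many crawl tasks concurrently, capped by a semaphore."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def worker(url):
        async with semaphore:  # at most max_concurrency fetches in flight
            return url, await fetch_page(url)

    results = await asyncio.gather(*(worker(u) for u in urls))
    return dict(results)


urls = [f"https://example.com/page/{i}" for i in range(1000)]
pages = asyncio.run(crawl_many(urls, max_concurrency=50))
print(len(pages))  # 1000
```

The semaphore is the key design choice: it lets you queue an arbitrarily large batch of URLs while keeping the load on your machine, and on the target site, bounded.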

Pros:

- Advanced options and full control over the algorithms, model selection, and final crawl result.

Cons: The Cloud API is still in limited access. Self-hosting requires resources and setup.

Pricing: Free.

Ease of use: Low. Requires a significant amount of setup time, technical expertise, and resources.

Apify

A cloud-based platform for building, running, and scaling web scraping and automation “actors” (apps). It has a vast marketplace of pre-built scrapers and automation tools.

Key Features:

- Handling complex workflows is where this tool shines.

Pros:

- Running tasks in parallel.

- Scales well for large projects.

Cons: The steep learning curve makes it more challenging to get started at first, and costs can quickly escalate.

Pricing: Freemium. Flexible plans with pay-as-you-go usage, starting at $39 for a premium package and going up to $999 for businesses.

Ease of use: Medium to High. Complex tasks can be challenging to configure correctly, but for generalized projects, it works great.

Bright Data

Bright Data’s Crawl API is designed to automate the extraction and mapping of content from entire websites. It can capture both static and dynamic content, delivering data in formats like Markdown, HTML, Text, and JSON.

Key Features:

- Maps the entire website structure in a single request.

- Captures both static and dynamic web content.

Pros:

- Flexible enough for SEO, AI, and compliance use cases.

- Scalable infrastructure for large projects.

Cons: Works in an API style, so it can present a steeper learning curve, even for experienced developers.

Pricing: Premium. 1,000 records cost between $1.50 and $0.75, with lower rates at higher volumes.

Ease of use: Medium. It is harder to start with, but it can be a great scaling tool.

Firecrawl

An API-first service built to turn any website into clean, structured data (Markdown or JSON) for LLMs and AI applications.

Key Features:

- Automatically handles sitemap discovery and recursive crawling.
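Sitemap discovery usually starts from a site's `sitemap.xml`, which under the sitemaps.org protocol is either a `<urlset>` of page URLs or a `<sitemapindex>` pointing to further sitemaps. The sketch below walks both cases with the standard library's `xml.etree.ElementTree`. It illustrates the general idea only; it is not Firecrawl's implementation, and the sitemap URLs and contents are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS_URI = "http://www.sitemaps.org/schemas/sitemap/0.9"
NS = {"sm": SITEMAP_NS_URI}


def parse_sitemap(xml_text):
    """Return (page_urls, child_sitemap_urls) for a <urlset> or <sitemapindex>."""
    root = ET.fromstring(xml_text)
    if root.tag == f"{{{SITEMAP_NS_URI}}}sitemapindex":
        children = [el.text.strip() for el in root.findall("sm:sitemap/sm:loc", NS) if el.text]
        return [], children
    pages = [el.text.strip() for el in root.findall("sm:url/sm:loc", NS) if el.text]
    return pages, []


def discover_urls(root_sitemap_url, fetch):
    """Expand sitemap indexes recursively into a flat list of page URLs."""
    pages, pending, seen = [], [root_sitemap_url], set()
    while pending:
        sitemap_url = pending.pop()
        if sitemap_url in seen:  # guard against sitemaps that reference each other
            continue
        seen.add(sitemap_url)
        xml_text = fetch(sitemap_url)
        if xml_text is None:     # missing or unreachable sitemap
            continue
        page_urls, child_sitemaps = parse_sitemap(xml_text)
        pages.extend(page_urls)
        pending.extend(child_sitemaps)
    return pages


# Hypothetical sitemaps served from memory instead of over HTTP.
FAKE_SITEMAPS = {
    "https://example.com/sitemap.xml": (
        f'<sitemapindex xmlns="{SITEMAP_NS_URI}">'
        "<sitemap><loc>https://example.com/sitemap-blog.xml</loc></sitemap>"
        "</sitemapindex>"
    ),
    "https://example.com/sitemap-blog.xml": (
        f'<urlset xmlns="{SITEMAP_NS_URI}">'
        "<url><loc>https://example.com/blog/1</loc></url>"
        "<url><loc>https://example.com/blog/2</loc></url>"
        "</urlset>"
    ),
}

print(discover_urls("https://example.com/sitemap.xml", FAKE_SITEMAPS.get))
```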

Pros:

- Exceptional Speed & Coverage: Handles JavaScript-heavy sites without manual proxy management.

- LLM-Ready Output: Purpose-built to deliver data in formats suited to RAG (Retrieval-Augmented Generation) systems.

Cons: Requires technical knowledge, since it is an API you build on; developers are its primary users.

Pricing: Freemium. From $16 to $333, depending on the number of pages to process.

Ease of use: Great for developers but less so for non-technical users.

Diffbot

Utilizes computer vision and natural language processing to “see” and understand web pages like a human. It offers pre-trained models to automatically extract specific entity types (Articles, Products, People, Discussions, etc.) without configuration.

Key Features:

- Unmatched Accuracy: For its core entity types (e.g., news articles, product pages), its AI-driven extraction is highly accurate and consistent.

- Zero Configuration: Requires no training or rules for standard page types; just send a URL.

Pros:

- Knowledge Graph: Extracted data can populate a private or public knowledge graph, enabling powerful semantic search.

Cons: One of the more expensive options on the market.

Pricing: Freemium. A free option is available for trying it out, but premium packages are expensive, ranging from $299 to $899 per month.

Ease of use: High to Medium. Easy for the page types it is designed for, but it can get quite complex for custom solutions.

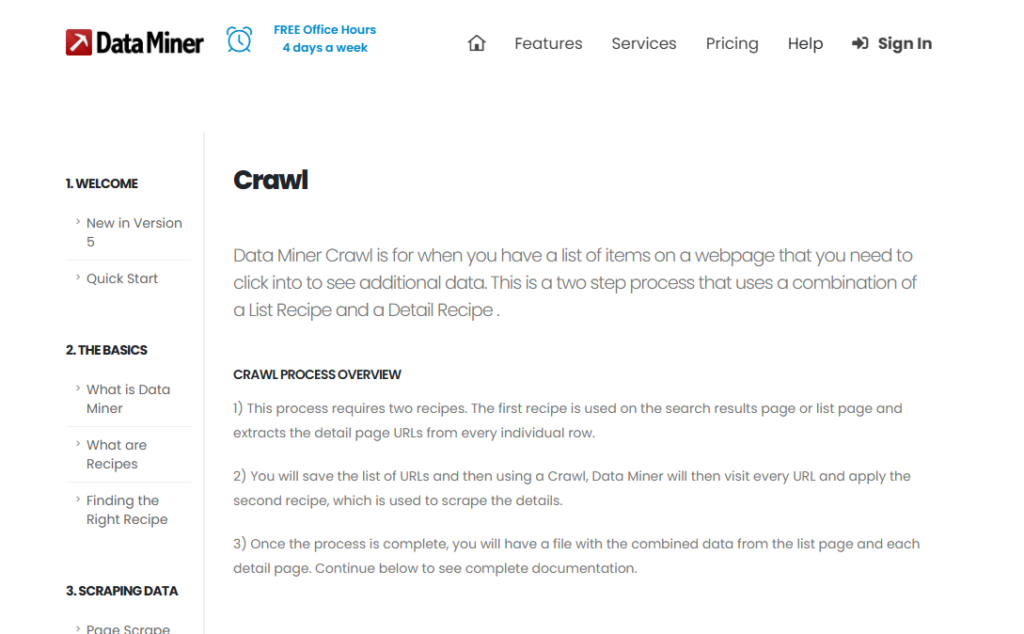

Data Miner

Data Miner is a scraping and crawling tool that works based on recipes. A recipe is a set of rules that Data Miner follows to find the desired data.
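To illustrate the recipe idea, here is a hypothetical recipe expressed as a Python dictionary: one pattern locates each repeated row (say, a product card) and one pattern per field pulls out a value. Data Miner's own recipe format is different; regular expressions are used here only to keep the sketch dependency-free, and they are too brittle for production HTML.

```python
import re

# Hypothetical recipe: one row pattern plus one capture-group pattern per field.
RECIPE = {
    "name": "product-list",
    "row": r'<div class="product">(.*?)</div>',
    "fields": {
        "title": r"<h2>(.*?)</h2>",
        "price": r'<span class="price">\$([\d.]+)</span>',
    },
}


def apply_recipe(recipe, html):
    """Apply the recipe's rules to HTML and return one dict per matched row."""
    rows = []
    for row_html in re.findall(recipe["row"], html, flags=re.DOTALL):
        record = {}
        for field, pattern in recipe["fields"].items():
            match = re.search(pattern, row_html, flags=re.DOTALL)
            record[field] = match.group(1).strip() if match else None  # missing field -> None
        rows.append(record)
    return rows


html = (
    '<div class="product"><h2>Mug</h2><span class="price">$12.50</span></div>'
    '<div class="product"><h2>Lamp</h2></div>'
)
print(apply_recipe(RECIPE, html))
```

Keeping the rules in data rather than code is what makes recipes shareable: the same `apply_recipe` engine can run any recipe written for a different site.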

Key Features:

- Customizable recipes for scraping and crawling.

- Prebuilt recipes for popular websites.

Pros:

- Configurable crawler with many settings

Cons: Crawling requires some technical knowledge for the correct setup.

Pricing: Freemium. Generous free plan with 500 pages/month for scraping, and premium plans range from $20 to $200, depending on the number of pages to scrape and additional features included.

Ease of use: High to Medium.

Why Do Most Users Choose Chat4Data?

Chat4Data's simplicity and range of features offer the best value for your money. It covers a wide range of use cases across various industries. Whether you are just starting and looking for a no-code solution or trying to scale, Chat4Data supports both. Beyond the features expected of an AI crawler tool, Chat4Data provides:

- Privacy-friendly scraping: All processing is done locally, including your login credentials, so Chat4Data does not store any data, prioritizing your data safety.

- Efficient credit usage: For an average request, Chat4Data uses between 7 and 10 credits, so even the entry-level $7 plan (2,000 credits) stretches across a sizable number of requests.

- Direct browser access: Chat4Data functions as a Chrome extension, which means all the code behind the webpage is accessible to it, allowing for seamless data scraping and crawling.
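As a quick sanity check on the credit figures above, the snippet below estimates how many average requests the $7 plan (2,000 credits) covers at 7 and at 10 credits per request, and what each request costs. The inputs are the numbers quoted in this article; actual consumption depends on the page and the prompt.

```python
PLAN_PRICE_USD = 7
PLAN_CREDITS = 2000


def requests_per_plan(plan_credits, credits_per_request):
    """Whole requests a plan covers (partial requests are dropped)."""
    return plan_credits // credits_per_request


def cost_per_request(price_usd, plan_credits, credits_per_request):
    """Dollar cost of one request at the plan's per-credit price."""
    return price_usd * credits_per_request / plan_credits


for credits in (7, 10):
    n = requests_per_plan(PLAN_CREDITS, credits)
    cost = cost_per_request(PLAN_PRICE_USD, PLAN_CREDITS, credits)
    print(f"{credits} credits/request: {n} requests, ${cost:.4f} per request")
```

With those figures, the $7 plan covers roughly 200 to 285 average requests, at about 2.5 to 3.5 cents each.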

See here how Chat4Data compares to other Chrome extensions.

Its most significant advantage lies in natural language prompting, which lowers the barrier to entry to a minimum. There is no need to code or install complex tools; just add it as a browser extension and start scraping!

Conclusion

The AI crawler industry is robust and diverse, offering options that range from no-code solutions to advanced, production-grade tools for any type of project. The goal is to let any professional who wants to conduct data-driven research start doing so now.

By reviewing the comparison above, you can determine the best tool for your use case; however, for most users, Chat4Data is the preferred option. It offers powerful yet private AI crawler features that unlock data instantly.

Want a deeper dive? Check out these articles:

- How to Power Up Your Data Collection with AI Scraping

- How to Turn ChatGPT into a Web Scraping Assistant

- Top 8 Best AI Web Scrapers in 2025

FAQs about AI Crawlers

1. Which tool is best for scraping JavaScript-heavy sites?

Tools with full browser automation capabilities are best at handling JavaScript-heavy websites. Chat4Data handles them reliably: it has direct access to the browser page you are scraping, so it works with the content after the page's JavaScript has run.

2. Which crawlers support local model execution?

Crawl4AI gives you complete control to run any local model for crawling. It is open-source, but it requires resources and setup knowledge. Most other tools in the AI crawler market are SaaS-based.

3. Which tools include unlocking or anti-blocking features?

Chat4Data, Octoparse, and BrowseAI have strong anti-blocking features that make scraping resemble human interaction. They use random delays and natural browsing patterns so that the websites you scrape are less likely to recognize the traffic as automated.
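The random-delay idea is easy to sketch: instead of firing requests at a fixed interval, pause for a random duration between them. The function below is a hypothetical illustration, not how Chat4Data, Octoparse, or BrowseAI implement it, and `fetch` stands in for whatever actually loads a page. The `sleep` and `seed` parameters are there so the behavior can be tested without real waiting.

```python
import random
import time


def fetch_with_jitter(urls, fetch, min_delay=1.0, max_delay=4.0, sleep=time.sleep, seed=None):
    """Fetch URLs in order, pausing a random interval between consecutive requests."""
    rng = random.Random(seed)  # seedable for reproducible tests
    results = {}
    for i, url in enumerate(urls):
        if i > 0:
            # Uniformly random gap instead of a fixed, machine-like interval.
            sleep(rng.uniform(min_delay, max_delay))
        results[url] = fetch(url)
    return results


# Usage with a stand-in fetch function and a sleep that only records the delays.
recorded_delays = []
pages = fetch_with_jitter(
    ["https://example.com/1", "https://example.com/2", "https://example.com/3"],
    fetch=lambda url: f"<html>{url}</html>",
    sleep=recorded_delays.append,
    seed=7,
)
print([round(d, 2) for d in recorded_delays])
```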

4. How do pricing tiers compare across these crawlers?

Pricing varies across companies, ranging from free (for small projects) to $10 to $50/month for higher usage, and $100+ for business packages. Chat4Data offers the most affordable plan at $7 for 2,000 credits, and with efficient usage, you can use it for large-scale projects.

5. Which crawlers are easiest to integrate with Python?

Firecrawl and Crawl4AI offer dedicated SDKs (Software Development Kits) that can be installed directly as Python libraries. Other tools can be integrated through their REST APIs, for example using Python's Requests library.
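For tools without a Python SDK, a REST call is usually all you need. The sketch below builds an authenticated JSON POST request with the standard library's `urllib.request`; the endpoint URL, API key, and payload fields (`url`, `format`) are hypothetical placeholders, so check each provider's API reference for the real ones.

```python
import json
import urllib.request

API_URL = "https://api.example-crawler.com/v1/scrape"  # hypothetical endpoint
API_KEY = "YOUR_API_KEY"                                # placeholder credential


def build_scrape_request(target_url, output_format="markdown"):
    """Build (but do not send) a JSON POST request for a hypothetical scrape API."""
    payload = json.dumps({"url": target_url, "format": output_format}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=payload,
        headers={"Authorization": f"Bearer {API_KEY}", "Content-Type": "application/json"},
        method="POST",
    )


def scrape(target_url):
    """Send the request and decode the JSON response (requires a real endpoint)."""
    request = build_scrape_request(target_url)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the Requests library, the equivalent is a single `requests.post(...)` call that passes the payload via `json=` and the auth header via `headers=`.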